[This blog was originally published in November of 2019 and updated with new content in May of 2021.]

Whether you are a software architect, a developer, or a DevOps engineer, integrating your application into the cloud, understanding the constructs of the best cloud provider for your needs, and adopting the latest technologies are probably already part of your day-to-day. But when the rubber hits the road, modeling your application in the cloud can be a time-consuming, error-prone process. And quite frankly, using Infrastructure as Code to build every necessary environment individually is not the most judicious use of resources for achieving your organization’s digital transformation goals.

In this article, we will review and contrast a traditional Infrastructure as Code approach using Terraform with an alternative, time-saving infrastructure automation approach: environment blueprinting with Torque.

Traditional Infrastructure as Code Approach to Model an Application in the Cloud

In a typical implementation of the well-documented load-balanced web server pattern, a series of Terraform commands is used to compose the infrastructure layer of an application running on NGINX servers in AWS. The finished product is a Terraform configuration file and a cloud-specific plan that can be reused to deploy the environment repeatedly.

To compose the application’s infrastructure layer, the following resources in AWS will have to be defined and created:

- A VPC that creates an isolated network environment

- An internet gateway to connect the VPC to the internet

- An internet-facing Application Load Balancer that gives users access to the web servers

- Public subnets for the NAT gateways and the bastion Auto Scaling group (ASG)

- Private subnets for the web server ASGs

- NAT gateways that let the web servers in the private subnets reach the internet

- ASGs running EC2 instances configured with NGINX

- An ASG running an EC2 instance configured with Ubuntu (our bastion host)

- 3 security groups: one each for the load balancer, the web servers, and the bastion host

The list above is a good example of implementing some AWS best practices when building a simple web application. All those resources and their dependencies are complex and hard to maintain. Furthermore, they cover only the front-end component of a real-world business application. Once we consider more complete architectures that model end-to-end, interrelated services, which must also be maintained, troubleshot, expanded, and integrated with other cloud services, the complexity quickly becomes overwhelming.
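To make the dependency point concrete, here is a minimal Python sketch (3.9+, using the standard library's graphlib) that encodes the resources listed above as a dependency graph and derives one valid creation order. The resource labels and the exact edges are simplified assumptions for illustration, not Terraform resource types; Terraform builds a comparable graph internally from the references between resources.

```python
from graphlib import TopologicalSorter

# Hypothetical, simplified dependency map for the resources listed above.
# Each key is a resource; each value is the set of resources that must exist first.
# Labels are illustrative only, not Terraform resource type names.
DEPENDENCIES = {
    "vpc": set(),
    "internet_gateway": {"vpc"},
    "public_subnets": {"vpc"},
    "private_subnets": {"vpc"},
    "sg_load_balancer": {"vpc"},
    # Assumption: the web SG only admits traffic from the load balancer's SG.
    "sg_web": {"vpc", "sg_load_balancer"},
    "sg_bastion": {"vpc"},
    "nat_gateways": {"public_subnets", "internet_gateway"},
    "load_balancer": {"public_subnets", "sg_load_balancer", "internet_gateway"},
    "bastion_asg": {"public_subnets", "sg_bastion"},
    "web_asg": {"private_subnets", "nat_gateways", "sg_web", "load_balancer"},
}


def creation_order(deps):
    """Return one valid creation order (dependencies first).

    Raises graphlib.CycleError if the graph contains a cycle.
    """
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    for step, resource in enumerate(creation_order(DEPENDENCIES), start=1):
        print(f"{step:2}. {resource}")
```

Even this simplified graph has 11 nodes and 18 edges for a front end alone; every edge is something the template author has to get right and keep right as the architecture grows.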

Using this approach, modeling and automating your application environment for a cloud-ready architecture requires a significant investment in a specific technology, in both time and resources.

An Alternative, Time-Saving Approach

Let’s consider an alternative approach that would enable a modern cloud application to be modeled in a fraction of the time, using a building block approach and out of the box automation.

Torque is an infrastructure automation platform. It connects to your public cloud accounts (e.g., AWS, Azure) and Kubernetes clusters and automates cloud environment provisioning and deployment throughout your release pipeline. In practice, it lets users focus on business goals rather than on the cloud provider itself. Torque works from blueprints that describe your environments in terms of building blocks called applications, along with their related infrastructure components.

Let’s use Torque to create the same NGINX web application created with Terraform and compare this method to our previous approach.

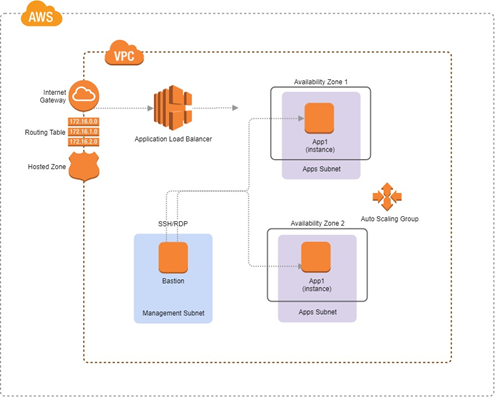

Torque will automatically provide all the infrastructure blocks needed on the cloud-provider side for the best performance and security. Here is a partial description of the out-of-the-box deployment in this scenario, using the AWS cloud infrastructure:

- Isolated VPC connected to an Internet gateway and a hosted zone configuration.

- Application subnets in two availability zones.

- Auto Scaling Group with two EC2 instances of our web application behind an application load balancer.

- Management subnet for a Bastion machine open to RDP/SSH traffic for easy debugging of the sandbox applications.
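As a rough illustration of the addressing work this removes, the following Python sketch carves one application subnet per availability zone, plus a management subnet, out of a VPC range using the standard library's ipaddress module. The 10.0.0.0/16 range, the /24 subnet size, and the AZ names are assumptions chosen for the example; this is not a description of how Torque actually allocates addresses.

```python
import ipaddress

# Hypothetical inputs chosen for illustration only.
VPC_CIDR = "10.0.0.0/16"
AZS = ["us-east-1a", "us-east-1b"]


def plan_subnets(vpc_cidr, azs, new_prefix=24):
    """Allocate consecutive, non-overlapping blocks from the VPC range:
    one application subnet per AZ, followed by one management subnet."""
    vpc = ipaddress.ip_network(vpc_cidr)
    blocks = vpc.subnets(new_prefix=new_prefix)  # generator of equal-size blocks
    plan = {f"app-{az}": next(blocks) for az in azs}
    plan["management"] = next(blocks)
    return plan


if __name__ == "__main__":
    for name, net in plan_subnets(VPC_CIDR, AZS).items():
        print(f"{name:18} {net}  ({net.num_addresses} addresses)")
```

With the inputs above, the application subnets come out as 10.0.0.0/24 and 10.0.1.0/24 and the management subnet as 10.0.2.0/24. A real deployment would also need route tables, gateway attachments, and security groups around these ranges.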

In order to compose our application, we will use the Bitnami NGINX Open Source Stack as our web application.

Here is what a blueprint designer would need to define to support this scenario, and what would be automatically covered by the automation:

| What we need to define | Cloud-specific infrastructure we don’t need to worry about |
| --- | --- |
| Base image to use for the application | VPC |
| How to configure the app | Subnets |
| How to health check the app | Security groups |
| Which ports to expose and to whom (public/private) | Auto Scaling groups |
| What inputs or artifacts does my app need? | Load balancers |
| What are the scaling requirements? | Bastion access and management DNS |

The process for creating a blueprint that models the NGINX application is as follows:

- Create a YAML file called “nginx.yaml” and add the basic metadata details.

- Define os_type: Linux and the image. To deploy on AWS, add the AMI ID, the region, and the machine username, which allows direct access for debugging later on.

- Under the infrastructure section, add the instance_type and the port to expose to the outside world once the application is deployed.

- Under the configuration section, add a health check that verifies the application port is up after deployment.
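The health check in the last step boils down to confirming that something is listening on the application port. Here is a minimal Python sketch of such a TCP probe with retries; the function name, retry count, and delays are hypothetical, and Torque’s built-in health check may work differently.

```python
import socket
import time


def port_is_up(host, port, retries=10, delay=3.0, timeout=2.0):
    """Return True as soon as a TCP connection to host:port succeeds,
    or False after `retries` failed attempts.

    A successful connect only proves that a process is listening on the port;
    it does not prove that NGINX is serving the expected content.
    """
    for attempt in range(retries):
        try:
            # Resolves the host and attempts a TCP handshake within `timeout` seconds.
            with socket.create_connection((host, port), timeout=timeout):
                return True
        except OSError:
            # Refused, timed out, or unreachable: wait before the next attempt.
            if attempt < retries - 1:
                time.sleep(delay)
    return False
```

For example, port_is_up("203.0.113.10", 80) would make up to 10 connection attempts, 3 seconds apart, against a placeholder instance address. An HTTP-level check that requests a known page and inspects the status code would be stricter, if the application exposes one.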

Finally, we add the application to our environment blueprint. The blueprint’s YAML defines a set of applications and their dependencies and provides a way to define the required infrastructure and application parameters.
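To show how blueprint-level inputs can flow into application parameters, here is a small Python sketch. The dictionary layout, the "$NAME" reference syntax, and the input names are all hypothetical stand-ins, not Torque’s blueprint YAML schema; the point is only that parameters are declared once at the blueprint level and resolved for each application at launch time, with missing required values rejected up front.

```python
# Hypothetical blueprint structure, loosely mirroring the prose above.
# Keys, input names, and the "$NAME" reference syntax are illustrative only.
BLUEPRINT = {
    "inputs": {"NGINX_PORT": "80", "INSTANCE_TYPE": None},  # None = required, no default
    "applications": {
        "nginx": {"instance_type": "$INSTANCE_TYPE", "port": "$NGINX_PORT"},
    },
}


def resolve(blueprint, provided):
    """Merge user-provided inputs with defaults, then substitute "$NAME"
    references in each application's parameters.

    Raises ValueError for missing required inputs or undeclared references.
    """
    values = {name: provided.get(name, default)
              for name, default in blueprint["inputs"].items()}
    missing = sorted(name for name, value in values.items() if value is None)
    if missing:
        raise ValueError(f"missing required inputs: {', '.join(missing)}")

    resolved = {}
    for app, params in blueprint["applications"].items():
        resolved[app] = {}
        for key, value in params.items():
            if isinstance(value, str) and value.startswith("$"):
                ref = value[1:]
                if ref not in values:
                    raise ValueError(f"{app}.{key} references undeclared input {ref}")
                value = values[ref]
            resolved[app][key] = value
    return resolved


if __name__ == "__main__":
    print(resolve(BLUEPRINT, {"INSTANCE_TYPE": "t3.micro"}))
    # {'nginx': {'instance_type': 't3.micro', 'port': '80'}}
```

Failing fast on a missing input before anything is provisioned avoids paying for a half-built environment that cannot start.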

The result is a very simple construct that provides the same result as the traditional Terraform-based template for a fraction of the work: 30 lines of code vs. 200. As an added bonus, it includes a built-in health check.

In summary, the list of 9 tedious configuration tasks we mentioned in the first section of this blog will be entirely automated by Torque.

The story doesn’t end there. Torque provides a fully-managed platform to manage these blueprints “as a service”. That means governance, access control policies, multi-tenancy and full environment lifecycle for each stage of the DevOps release, from development to test to production. Taken together, the features available in Torque result in significant cost savings, a dramatic increase in efficiency, and heightened governance over cloud environments compared to using Infrastructure as Code alone.