Optimize How You Build & Run GPU Stacks for AI Solutions

Quali Torque streamlines delivery and automates basic tasks to maximize performance and optimize costs for GPUs and other infrastructure supporting your AI solutions.

Don’t Let Complexity Hold Back Your AI Performance

Unlock velocity with an intuitive, Gen AI-powered experience for building, running, and maintaining your GPU stacks.

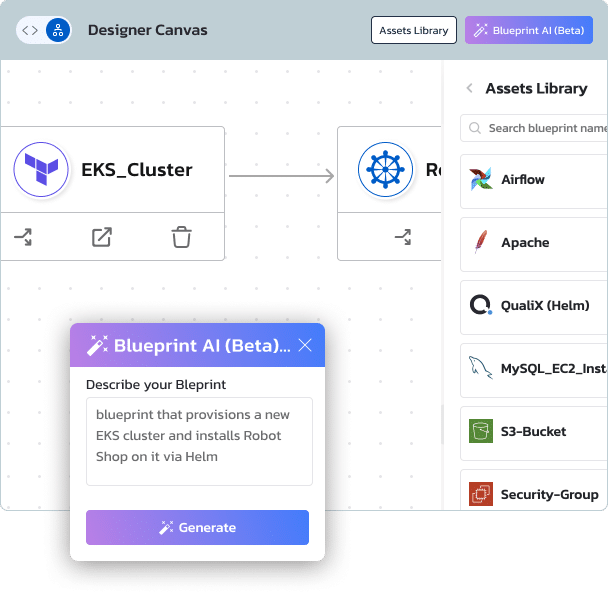

AI-Generated Blueprints

Describe what you need in natural-language prompts to create a blueprint defining the code to deploy the GPUs, data services, and any other resources your AI solution requires.

Dynamic GPU Scaling

Ensure capacity without costly over-provisioning by automatically scaling GPUs in response to workload needs.

Continuous Optimization

Automate actions, enforce guardrails, and reconcile drift and other errors proactively so your teams can spend less time on debugging and maintenance.

See How Quali Torque Supports AI Workloads

Simplify how you manage AI workloads with automation and an intuitive user experience.


Quali Torque Manages the Entire Lifecycle of Your AI Infrastructure

Easy-to-Use Infrastructure Modules

Torque creates interchangeable “building blocks” defining the resources needed to run your Kubernetes clusters and other infrastructure assets, eliminating the learning curve and accelerating the creation of your GPU environments.

AI Infrastructure Orchestration

Torque’s AI Copilot draws on the user’s inventory of infrastructure modules to design an environment and generate the code needed to provision AI workloads, based on the user’s natural-language prompts.

Self-Service Access

Once a blueprint defining the code to run an AI workload is ready, Torque executes that code and delivers the AI solution through an intuitive, self-service interface where data scientists, researchers, and other end users can access it on demand.

Dynamic GPU Scaling

Torque continuously monitors the state of the environment supporting your AI solution and automatically scales GPUs to align with workload needs. For example, Torque will scale GPUs up to provide capacity for resource-intensive phases such as training, then scale GPUs down to prevent costly over-provisioning for less resource-intensive phases such as inference.
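The phase-aware behavior described above can be sketched as a simple scaling policy. This is a minimal, hypothetical illustration, not Torque’s implementation or API: the phase names, the `GPUS_PER_PHASE` table, and the `plan_gpu_scaling` function are assumptions made for the example. A real controller would also react to monitored utilization rather than rely only on a fixed lookup.

```python
from dataclasses import dataclass

# Hypothetical phase-to-GPU mapping; the counts are illustrative only,
# not Torque defaults.
GPUS_PER_PHASE = {"training": 8, "data_qa": 2, "inference": 1}


@dataclass
class ScalingDecision:
    current: int  # GPUs currently allocated
    target: int   # GPUs the policy wants for the active phase

    @property
    def action(self) -> str:
        # Compare target with current allocation to pick a direction.
        if self.target > self.current:
            return "scale_up"
        if self.target < self.current:
            return "scale_down"
        return "no_change"


def plan_gpu_scaling(phase: str, current_gpus: int, max_gpus: int = 8) -> ScalingDecision:
    """Return the GPU count a hypothetical phase-aware policy would target."""
    if phase not in GPUS_PER_PHASE:
        raise ValueError(f"unknown workload phase: {phase!r}")
    # Cap the target so the policy never exceeds the available GPU quota.
    target = min(GPUS_PER_PHASE[phase], max_gpus)
    return ScalingDecision(current=current_gpus, target=target)
```

For instance, `plan_gpu_scaling("inference", current_gpus=8)` targets one GPU and reports `scale_down`, mirroring the shift from a resource-intensive training phase to lighter inference.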

Continuous Optimization

With visibility into the state of the environment, Torque can automate actions on live infrastructure, enforce custom cloud governance policies, and alert users about infrastructure errors or configuration drift proactively.

Frequently Asked Questions

Torque simplifies the creation of the tech stack supporting AI, then manages the lifecycle of that tech stack.

This makes it easier to build, run, and maintain environments at scale.

Torque treats infrastructure as managed, stateful environments. For AI solutions, this can include everything from AI agents and LLMs to cloud-based data services, GPUs, and other infrastructure.

Torque automatically creates a “blueprint” defining the code for the GPU layer supporting the AI solution. It does this by discovering Infrastructure as Code (IaC) modules in the user’s repositories, inspecting their resource configurations, and creating a new, YAML-defined version in the platform.

To eliminate complex, time-consuming coding, Torque’s AI Copilot allows users to submit natural-language prompts describing the infrastructure they need and how it should be configured. Based on those prompts, Torque leverages the user’s resources to design the environment and generate the code needed to provision it. Once ready, the user can save this code as a single blueprint.

Some users have leveraged this approach to create blueprints defining all the GPUs, data services, and other infrastructure needed to support the workload, which the platform can then execute, monitor, and adjust.

For example, an AI solution powered by Torque can automate the execution of routine but critical tasks like training and data quality assurance, then automatically scale GPUs up and down to provide the capacity needed to deliver those tasks.

This ensures the AI solution has the infrastructure needed to support any given phase of the lifecycle, while also preventing costly over-provisioning for tasks that require fewer resources.

To learn more, book a demo with our team.

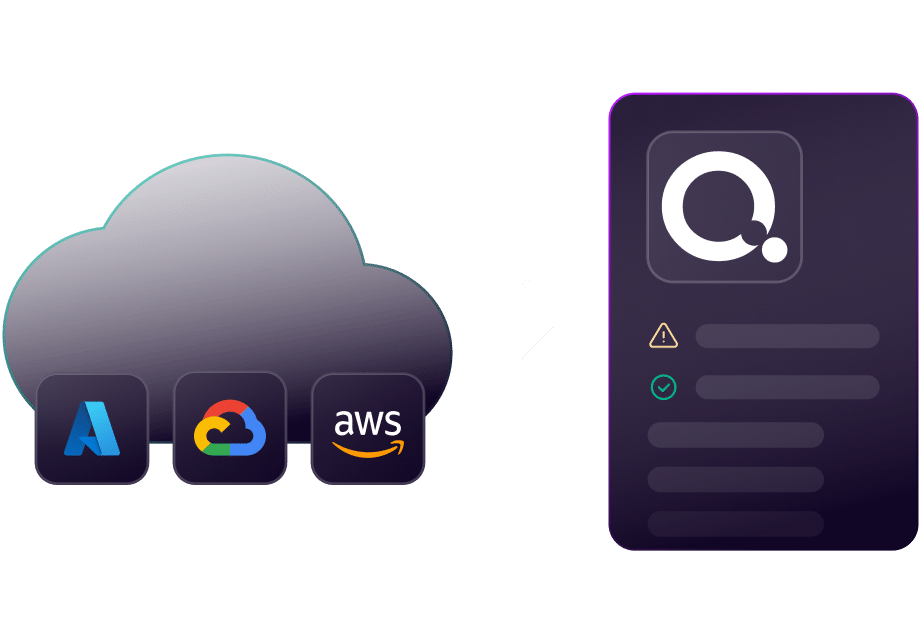

Both! Torque treats all infrastructure equally, regardless of where it’s hosted. This simplifies orchestration, delivery, and maintenance to reduce the time and budget spent on GPU infrastructure supporting AI solutions.

No, but Torque supports Infrastructure as Code. This includes:

- Accelerating the creation of IaC files by automatically codifying the resources found in the user’s AWS and Azure accounts.

- Discovering and leveraging any of the user’s IaC files from the user’s repositories to automate the creation of Environment as Code files that can be managed through the platform. This includes an AI Copilot that automatically orchestrates resources from the user’s IaC modules into Environment as Code blueprints that can be deployed and maintained continuously.

- Enforcing cloud policies and role-based access controls to deny the provisioning of any infrastructure that violates the user’s standards for security or costs.

- Mapping any IaC module in the user’s repositories to the active environments, inactive environments, and blueprints managed via the user’s Torque account. This provides an inventory of available resources and lets users anticipate how an update will affect their colleagues before committing it.

- Tracking all resource deployments, updates, configuration drift, errors, and other activity to optimize the reliability, productivity, and cost of Infrastructure as Code.

To understand how Torque supports Infrastructure as Code, watch this demo.

Torque users can leverage any number of agents to deploy their GPU infrastructure, including AWS, Microsoft Azure, NVIDIA DGX, Nebius, CoreWeave, Oracle Cloud Infrastructure, and Kubernetes.

For questions about support for GPUs, book a demo with our team.