Unlock the Value of Agentic AI with Streamlined Management for Your Entire Stack

Quali Torque simplifies the adoption of Agentic AI by creating a stateful, managed environment that continuously optimizes all infrastructure, data services, LLMs, and other resources.

Accelerate Adoption of Agentic AI with Streamlined Delivery of Your Environments

Agentic AI requires continuous, tight integration among infrastructure, data services, LLMs, microservices, and other resources.

Simplified Delivery

Create a single environment that orchestrates all the infrastructure, data, LLMs, and other resources needed to deliver your AI Agents.

Automation & Governance

Enforce custom policies and automate routine tasks required for critical phases of the AI lifecycle so your teams can spend less time on governance and maintenance.

Real-Time Visibility

Monitor the state of your AI resources so your teams can prevent issues, correct provisioning errors, and reconcile drift proactively.

Watch a Demo to See How Quali Supports Agentic AI

This brief video shows how Torque manages each layer of the tech stack supporting Agentic AI solutions.

Ready to learn more? Book a demo to go deeper

Browse Documentation on Torque’s Support for AI Workloads

Quali Torque Manages Your AI Agents as Stateful Environments

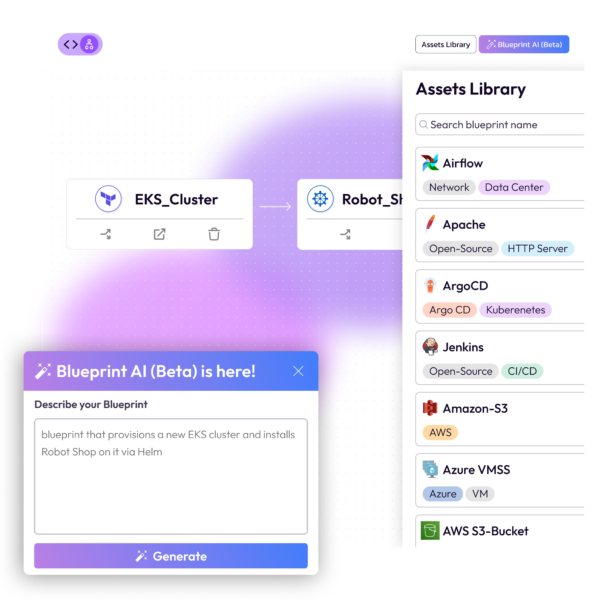

AI-Powered Environment Design

In response to user-submitted prompts, Torque’s AI Copilot designs environments and defines the code needed to provision any layer of the Agentic AI stack, based on the user’s existing automation assets and scripts.

Full-Stack Blueprints for Agentic AI

Torque creates a blueprint for each component of an Agentic AI solution, automates how those blueprints are maintained, and controls how they interact with each other to deliver the outcomes that users expect.

Continuous Monitoring & Optimization

Torque streamlines the maintenance of Agentic AI solutions by automating routine tasks such as recurring training, enforcing custom policies on infrastructure usage, and notifying users about configuration drift, provisioning errors, and other unexpected changes to the code supporting the Agent.

Dynamic GPU Scaling

Torque continuously monitors the state of the environment supporting your AI solution and automatically scales GPUs to align with workload needs. For example, Torque will scale GPUs up to provide capacity for resource-intensive phases such as training, then scale GPUs down to prevent costly over-provisioning for less resource-intensive phases such as inference.

Visibility with Context

Since Torque manages the state of the environment supporting an Agentic AI solution, the platform can track every update and action performed on the tech stack, with context showing who initiated each one and what changes they made to the state of the resource configurations.

Frequently Asked Questions

Torque manages the entire lifecycle of the tech stack supporting Agentic AI solutions. Torque accomplishes this with its Environment as Code approach to managing infrastructure, data, and other resources.

Torque’s AI Copilot automatically designs the environment for each layer of the AI tech stack: infrastructure (such as GPUs), data services, models (such as LLMs), and AI agents. This includes creating an Environment as Code “blueprint” for each layer, which contains the code to provision that layer based on its dependencies on the other layers.

Torque can then execute the code to provision these layers, thereby delivering the AI Agent. Since Torque manages all this code, the platform can also perform actions automatically, such as recurring training or data quality assurance tasks, and scale the infrastructure and data services to align resource utilization with workload needs. This eliminates manual work, ensures sufficient capacity, and optimizes costs by preventing expensive over-provisioning.

This approach also provides valuable monitoring and visibility so infrastructure, operations, and other teams responsible for maintaining the health of the Agentic AI solution can push updates, debug errors, and correct configuration drift quickly and easily.

For a brief overview, watch this video.

Both! Torque treats all infrastructure equally, regardless of where it’s hosted. This simplifies orchestration, delivery, and maintenance to reduce the time and budget spent on GPUs, data services, and other infrastructure supporting AI solutions.

Yes! Torque generates the code that packages Nvidia AI Enterprise resources, such as frameworks and models, as reusable “building blocks” that can be orchestrated into functional environments supporting the AI tech stack.

Torque’s AI Copilot allows users to automate the orchestration of Nvidia AI Enterprise resources into ready-to-run environment blueprints, which Torque can provision and manage continuously.

This shortens the learning curve and accelerates operations for teams that use Nvidia AI Enterprise software to deliver their AI solutions.

Torque users can leverage any number of agents to deploy their GPU infrastructure across platforms including AWS, Microsoft Azure, Nvidia DGX, Nebius, CoreWeave, Oracle Cloud Infrastructure, and Kubernetes.

For questions about GPU support, book a demo with our team.